Demonstrating Learning

and leveraging AI

I have decided to write a whole post about Formative Assessment and my idea for it, but I wanted to build something first, so that will probably be the next post after this one, or the one after that. Before I put everything down, I want to try out at least part of the idea to test its feasibility. Until then, let's talk about Learning Demonstrations.

Why do we have homework? Well, it is a form of assessment and a form of practice. But really, what we should be using homework for is to demonstrate the learning of material. There is no way to really know what a student knows. There's no way to really know what another human knows, or how they see things, or whether they understand what they've been taught.

– Me

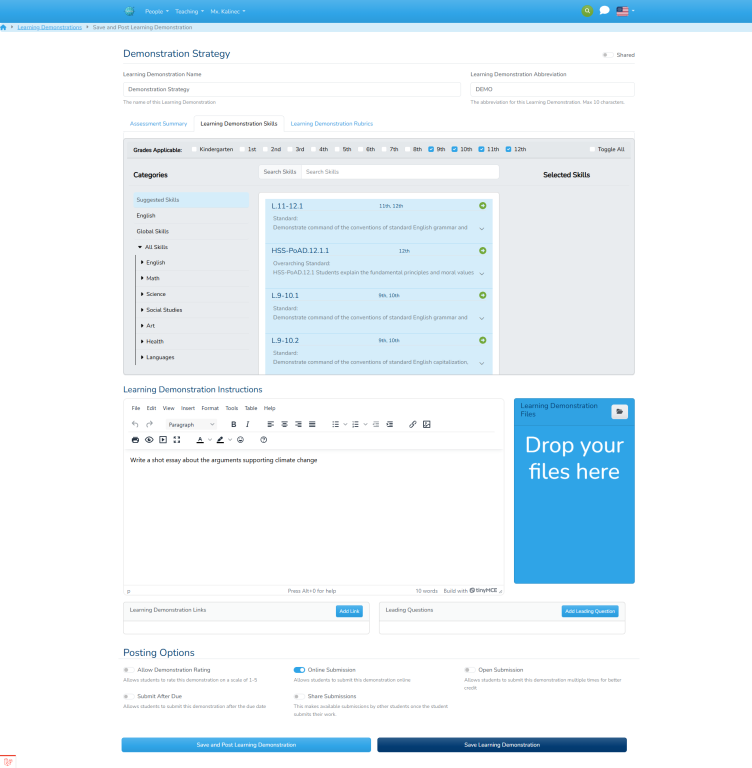

Think about everything that could possibly go into assignments. There's the name, of course, and the directions of what you will have to do for the assignment. I would usually call this the “assignment description”, but going with the idea that people (especially kids) tend to absorb and internalize the words used to describe things, I prefer to call this field the “Learning Objective”. This is all part of what I call the assignment's Presentation Section, whose purpose is to present the assignment to the student. This section includes not just text, but any other material that the teacher might find useful for the student completing the assignment, such as files, URLs, videos, images, etc.

The next section of the assignment, which I don't think is as heavily developed by teachers, is the Assessment Section. Most people believe that this is where you would put in the weights of the assignments, or attach it to some sort of criteria that you will be assessing, which it is, but it also needs to be much more. This is the place where teachers will tell us the two things I mentioned in my first assessment post:

- What is the relationship between the demonstration and the skill it is seeking to demonstrate?

- What is the relationship between the learning demonstration and the overall success in class?

The first question is answered by teachers linking this assignment to a list of Skills that it will assess, with optional weights on those relationships. The second one, however, requires that the class be set up with some sort of criteria that determine how a final grade is calculated. While I'm not a big proponent of a “final grade” (too summative for me), I recognize that the world runs this way. The best way to arrive at this magical grade is to set up a structure of criteria that determines the overall grade. I don't want to focus too much on this grade, though, just the fact that it exists. However, it also means that Criteria will have to be defined ahead of time in order to be linkable. Each assignment will link to a single criterion.

The last section of the assignment is the metadata section, meaning the information about the assignment itself. This includes things like when it is posted, when it is due, and who it is posted to. One of the things to remember is that an assignment is not guaranteed to be assigned to the whole class, as this would violate the idea of formative assessment. Rather, it can be custom-assigned to as many or as few students as you want. The idea is that this system will help you identify students with weaknesses and allow you to assign extra chances for the student to demonstrate their learning.

Now, it is important to understand that the Learning Demonstration is the artifact that the teacher builds as a model for the student to demonstrate learning. Once the teacher is done building it and assigns this Learning Demonstration to one or more students, it becomes a Learning Demonstration Opportunity. The nomenclature is intentional: it tells the student that this is not an assignment, but rather an opportunity for them to demonstrate to me, the teacher, that they have learned something. I will give them an official list of what they need to prove to me that they learned, and it will go into a “category” (criteria) grade that will determine the overall grade. It will also show that they are gaining experience in a Skill, and the skill level will give a visual representation of how far they've come.
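The sketch below makes the three sections and the Demonstration/Opportunity split concrete. It is a minimal sketch in Python, not the project's Laravel/PHP code. The section classes mirror the post. The specific fields, `LDOpportunity`, and `post_to` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Presentation:
    name: str
    learning_objective: str  # HTML from the text editor


@dataclass
class Assessment:
    skill_weights: dict[int, float] = field(default_factory=dict)  # skill id -> optional weight
    criterion_id: Optional[int] = None  # each demonstration links to a single criterion


@dataclass
class LearningDemonstration:
    presentation: Presentation
    assessment: Assessment
    posted_to: list[int] = field(default_factory=list)  # metadata: student ids


@dataclass
class LDOpportunity:
    demonstration: LearningDemonstration
    student_id: int


def post_to(demo: LearningDemonstration, student_ids: list[int]) -> list[LDOpportunity]:
    """Assign the demonstration to any subset of students, one opportunity each."""
    unique_ids = list(dict.fromkeys(student_ids))  # drop duplicates, keep order
    demo.posted_to.extend(unique_ids)
    return [LDOpportunity(demo, s) for s in unique_ids]
```

Giving a single struggling student an extra chance is the same call with a one-element list. That is the point of not assuming the whole class.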

Once the student is given a Learning Demonstration Opportunity, they will work on it and be able to communicate about it through chats that specifically revolve around that opportunity. This means that the student will be able to go into that container and post questions to the teacher or other students. Once the student is done, they will submit their Demonstrations to the teacher for assessment.

The Learning Demonstration Template

We do not want to have to re-create Learning Demonstrations over and over. Teachers will not stand for that, and they shouldn't have to. The goal is that teachers can save these Learning Demonstrations as “templates” that they can re-use in the future. For this use case, we have a Learning Demonstration Template. It can be created through its own editor, by saving a regular Learning Demonstration as a template when posting it, or by going back to a previously posted LD and saving that one as a template. A template will be a copy of the LD, but without any connection to classes. As such, I will be creating templates alongside demonstrations so the ability is always tied in. There will be an optional “link-back” connection to the template if the LD was created from a template.

All templates will also have the option to be “Shareable”, which will include them in a list of Learning Demonstrations that other teachers have shared. Teachers may then import these Learning Demonstrations into their templates and use them. It should be noted that attribution will be kept on shared templates, and people will not be able to re-share templates that were created from someone else's template.
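The sharing rules fit in a few lines. Below is a minimal Python sketch, assuming attribution is tracked with an `original_author_id` field and the link-back with a `source_template_id` field. Both field names and both functions are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class LDTemplate:
    id: int
    owner_id: int
    name: str
    learning_objective: str
    shareable: bool = False
    original_author_id: Optional[int] = None  # attribution when imported from someone else
    source_template_id: Optional[int] = None  # optional link-back


def import_shared(template: LDTemplate, new_id: int, importer_id: int) -> LDTemplate:
    """Copy a shared template into another teacher's templates, keeping attribution."""
    if not template.shareable:
        raise PermissionError("only shared templates can be imported")
    author = template.original_author_id if template.original_author_id is not None else template.owner_id
    return replace(template, id=new_id, owner_id=importer_id, shareable=False,
                   original_author_id=author, source_template_id=template.id)


def can_share(template: LDTemplate) -> bool:
    # Templates based on someone else's work keep their attribution but cannot be re-shared.
    return template.original_author_id in (None, template.owner_id)
```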

Throughout the next sections, I will be talking about Learning Demonstrations, but I will actually be building the Templates instead, since the code should be identical in a lot of places. But enough philosophy, let's get some code done. I'll be breaking down the code into three different sections: Presentation, Assessment, and Metadata. After I describe each one, I'll go over how it is all put together from a teacher perspective and what objects and events are generated.

The Presentation Section

This is where the teacher describes the Learning Objective for this Learning Demonstration. The idea in this section is to give the teacher as many choices as possible for providing information to the students. We live in an interconnected world where we're bombarded by different kinds of media in different formats, and we must offer the same range of communication mediums to the students we're trying to reach.

We will have some basic fields, of course. This is all stored in the model App\Models\SubjectMatter\Learning\LearningDemonstration, which is persisted to the database with the following properties:

- name: Simple name property, shown in big letters as the title.

- abbr: A 10-character-max abbreviation used in places with little space. I vacillate on whether 10 is the right number, but it will do.

- learning_objective: An HTML-based text field that the teacher uses to communicate the objective of this Learning Demonstration. It leverages the TextEditor Livewire component with the Documents Integration.

We will also be leveraging our Integrations by giving it the App\Interfaces\Fileable interface and its own storage key, WorkStoragesInstances::LearningDemonstrationWork. We will also be adding the storage key WorkStoragesInstances::LDOpportunityWork for student submissions later on. It is interesting to note that with the combined inputs of the text editor (with images) and raw file storage, we cover 99% of all the content teachers will need. It will depend on what kinds of files the system allows to be uploaded, but that restriction will be there regardless. The only real thing we might want to add is a list of links that might be relevant to the LD. However, it's been my experience (YMMV) that this is hardly used, since you can just add the link information to the description of the assignment.

So is it worth adding a URL section? On the one hand, we don't need it: it can be done in the description, most teachers don't use it, and it's not really relevant for worksheets or anything like that, since we can just use the file storage. On the other hand, it can add a sense of organization, where more organized teachers can use it as a reference section to which they can attach important information. It's also really cheap, as it can be a simple JSON field in the LD itself instead of its own table. I decided to go for the extra customization and made it a JSON field.

Similar to the URL section is a section that I loved in my previous systems: follow-up questions. The information entered for the presentation so far has been unstructured. While there are designated spaces for certain content, the type of content is up to the teacher to design and create. They can use any kind of file or URL and tailor their objective in whichever way they want. Follow-up questions are structured content that allows the teacher to ask questions in a pre-defined order with a simple question-answer format. They're meant to serve as a way to reflect on the assignment and will be simple textarea inputs. The questions will be simple and will not get their own database table. Instead, they'll be stored as a JSON field in the demonstration, and the answers in the opportunity itself.
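Storing both lists as JSON columns is straightforward. Below is a minimal Python sketch of what the columns might hold and how an opportunity could record answers in question order. The JSON shapes, the example URL, and `answers_for_opportunity` are assumptions, not the actual schema.

```python
import json

# Hypothetical column contents on the demonstration row.
links_json = json.dumps([
    {"label": "Fraction practice", "url": "https://example.org/fractions"},
])
questions_json = json.dumps([
    "Which step was the hardest, and why?",
    "How could you check your answer?",
])


def answers_for_opportunity(questions_json: str, answers: list[str]) -> str:
    """Pair each answer with its question, in order, for the opportunity's JSON column."""
    questions = json.loads(questions_json)
    if len(answers) != len(questions):
        raise ValueError(f"expected {len(questions)} answers, got {len(answers)}")
    return json.dumps([{"question": q, "answer": a} for q, a in zip(questions, answers)])
```

This sketch copies the question text next to each answer. That is one possible design choice: the answers still make sense if the teacher edits the questions later.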

The Assessment Section

The fun part! The teacher will be responsible for linking the correct skills that this Demonstration is trying to demonstrate. In theory, the teacher will have access to all skills in the system, from all levels and courses. However, giving this unfettered access to teachers (or anyone, really) is overwhelming, and they won't know where to look. We need to shrink the pool of suggestions to a smaller number. There are a few ways we can do this. The most obvious way, at least for Knowledge Skills, is to match the subject that this class belongs to. We can also look at the range of levels the class has and cross-reference it with the levels attached to the Skills.

The ideal way would be for “someone” to assign the correct Skills to every course in the system, thus making them available. This can be…onerous. Another possibility is to allow each teacher to select what they think they will need into a “suggestion” or “curriculum” list that they can access throughout the year. After the first year, we can use these suggestions for people who've never taught that class before. Finally, there might be a way to let AI determine which skills to use based on what the teacher has entered. The suggestions should also mark skills that students in the class might be lacking overall, based on previous skill assessments.

Once the suggestions are loaded, the teacher has free rein over which skills they would like to assess. I will not make a determination on how many skills a demonstration should seek to assess at once. There are quite a few opinions on that, and I don't feel qualified to make decisions based on them. So I will build my system open to any number and let the administration/faculty beware! However, from my limited view of things, I could see the idea of assessing a single skill while also assessing skill reinforcement. What I mean by this is that the teacher should focus on assessing a single new Skill per demonstration, but also select skills that the student has already been assessed on in order to determine growth. The teacher need not mark these. Rather, the system should know which are new skills and which are reinforced skills and present them that way.
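The system can tell new skills from reinforced ones with a simple set comparison against each student's assessment history. Below is a minimal Python sketch, assuming the history is available as a set of skill ids per student. `classify_skills` and `assessed_counts` are hypothetical helpers.

```python
def classify_skills(selected: list[int], previously_assessed: set[int]) -> dict[str, list[int]]:
    """Split the selected skills into new and reinforced ones for one student."""
    return {
        "new": [s for s in selected if s not in previously_assessed],
        "reinforced": [s for s in selected if s in previously_assessed],
    }


def assessed_counts(selected: list[int], history: dict[int, set[int]]) -> dict[int, int]:
    """For each selected skill, count how many students in the class were already assessed on it."""
    return {s: sum(s in assessed for assessed in history.values()) for s in selected}
```

The class-level counts are one way to surface skills that many students have not yet shown, which fits the “mark skills the class might be lacking” idea above.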

Once the user has selected the Skills they will be assessing, the rubrics are generated and offered to the user so they can disable the criteria they will not be assessing. The idea is that, depending on how a skill is built, and especially if a skill is multi-year, there might be criteria in the rubric that the student is not being assessed on yet. The teacher will therefore have the option of removing them completely. The result of this decision is then presented front and center to serve as a goal for what the student must demonstrate. The user will also be able to assign weights to the rubric items, either individually, as a group by skill, or as a whole. These weights will be used to determine the overall numerical grade that will serve as the “grade” the student gets. This is the least important part, in my opinion, but a necessary one in the culture we live in.
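The weighting described above reduces to a weighted average over the enabled rubric items. Below is a minimal Python sketch, assuming each item's effective weight is its own weight times an optional per-skill group weight. The data shapes and names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RubricItem:
    skill_id: int
    criterion: str
    weight: float = 1.0
    enabled: bool = True  # the teacher can switch off criteria not assessed yet


def demonstration_percent(items: list[RubricItem], scores: dict[str, float],
                          skill_weights: Optional[dict[int, float]] = None) -> float:
    """scores maps criterion name -> fraction earned (0.0 to 1.0); disabled items are ignored."""
    skill_weights = skill_weights or {}
    active = [i for i in items if i.enabled]
    weights = [i.weight * skill_weights.get(i.skill_id, 1.0) for i in active]
    total = sum(weights)
    if total == 0:
        raise ValueError("no enabled rubric items carry any weight")
    earned = sum(w * scores[i.criterion] for w, i in zip(weights, active))
    return 100 * earned / total
```

A weight “as a whole” needs no extra code here, because scaling every weight by the same factor leaves the percent unchanged.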

The Class Assessment Strategy

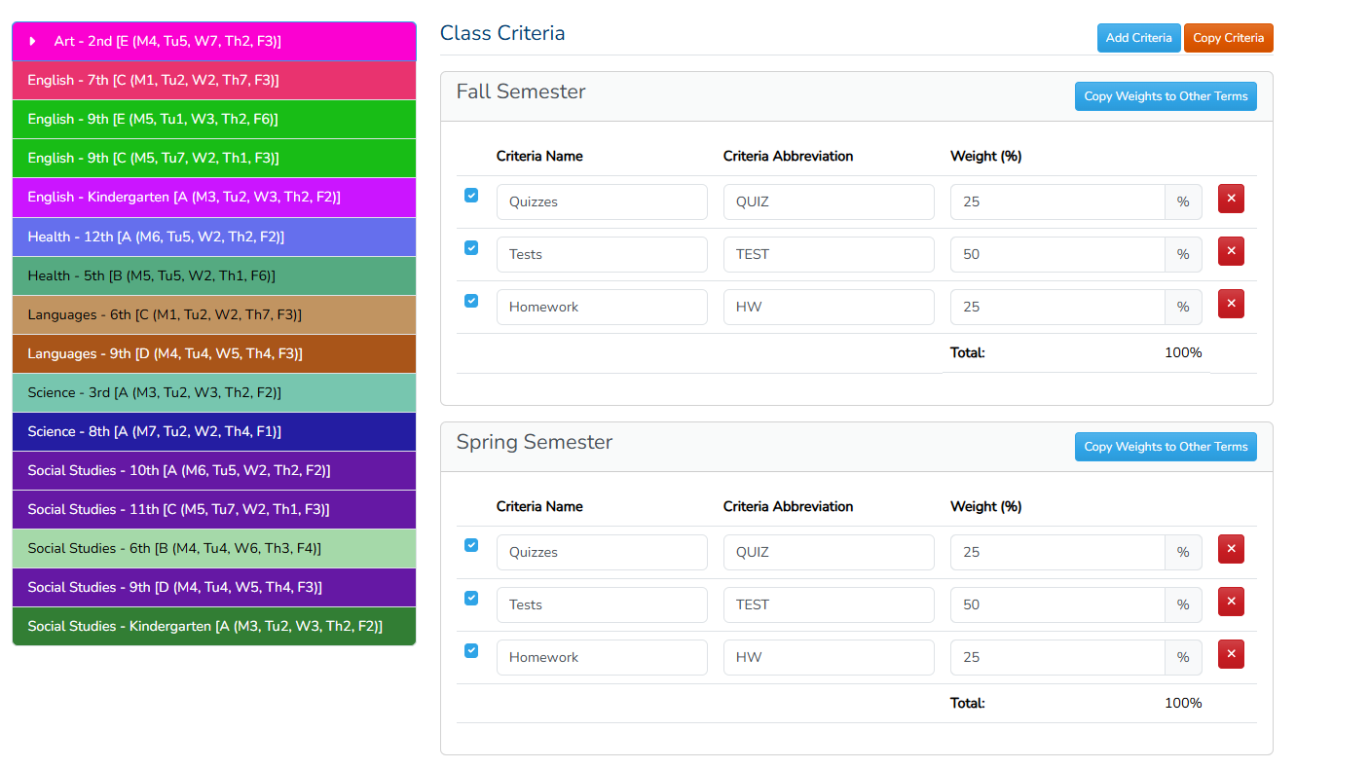

At this point, we have a way to assess the Demonstration and can even get a grade for it, but where should that grade go? The answer is the teacher-defined structure, ClassCriteria. The Class Criteria is the relationship between a type of demonstration (such as a quiz or homework) and the overall grade of the class. The overall grade of the class is a global, non-specific indicator of how well you're demonstrating the material in class. This grade should be used as a threshold for flagging students who might be underperforming. It should also give teachers a nudge to reinforce the material in which those students are not demonstrating learning.

The first thing that must happen, then, is that the teacher must have all their criteria set ahead of time. The criteria are teacher-defined, so they will not be saved in the template: they cannot be copied to another user, whose criteria might differ. Teachers will often use the same criteria in all of their classes, since grading methodology usually aligns to make things easier. I will therefore be defining a single Class Criteria in the context of a year. Every year, a teacher will create (or import) the criteria for the year. They can then decide whether the criteria apply globally (through inheritance) or whether a class overrides the default and uses a different set of criteria.

The Class Criteria must be set up in advance. In fact, there are probably a few things that should be set up in advance before a teacher is ready to start customizing their classes. To help with this, I will be adding a new field to the ClassSession object, setup_completed. It will be set to true once regular class-related activities (such as posting a Learning Demonstration) can happen. Eventually, I would like to create a new-year wizard of some kind that guides teachers through setting up their classes, but we haven't built enough to put one together yet. Once setup is done and the Learning Demonstration is linked to a Class Criteria, the user can still assign a weight for how this demonstration relates to the criteria, for example making it twice as important.
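The inheritance rule and the setup gate can be sketched together. The Python sketch below assumes criteria are stored as a name-to-weight mapping and that a session without an override inherits the teacher's yearly criteria. `criteria_override`, `effective_criteria`, and `can_post` are hypothetical names. Only `setup_completed` comes from the post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClassSession:
    id: int
    setup_completed: bool = False
    criteria_override: Optional[dict[str, float]] = None  # None: inherit the teacher's yearly criteria


def effective_criteria(session: ClassSession, yearly: dict[str, float]) -> dict[str, float]:
    return session.criteria_override if session.criteria_override is not None else yearly


def can_post(session: ClassSession, criterion: str, yearly: dict[str, float]) -> bool:
    """A demonstration may be posted only after setup, and only to a criterion the session uses."""
    return session.setup_completed and criterion in effective_criteria(session, yearly)
```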

The ClassCriteria will actually be a larger part of the ClassSession's AssessmentStrategy. Every ClassSession will contain an AssessmentStrategy as a self-contained class (not persisted to its own table), which will determine how each of the StudentRecords will be assessed in that class. The bulk of this will be a link between the teacher's yearly ClassCriteria and the ClassSession, called the ClassSessionCriteria that specifies a weight (percentage, but any weight will do) of how it relates to the overall grade. The AssessmentStrategy will also contain a calculation method, which will tell the strategy how to calculate the criteria grade and the overall grade. There are multiple strategies that might work depending on how the teacher chooses to calculate their gradebook and demonstrations. Some that I can think of:

- Percent: All the demonstrations will be calculated as percentages, which will then calculate to an overall criteria percent, which will then calculate to an overall percent.

- Total Points: Add up all the assignment points, regardless of criteria, and calculate a percent; that's the final grade.

- Criteria Points: All points are added up per criterion and transformed into a percent; then we get a total percent for the overall grade.
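The three calculation methods can give noticeably different results on the same data. Below is a minimal Python sketch, assuming each graded demonstration is recorded as `(criterion, earned, possible)` and ClassSessionCriteria weights are a name-to-weight mapping. Renormalizing weights when a criterion has no grades yet is an assumption. The function names are hypothetical.

```python
from collections import defaultdict

# Each graded demonstration: (criterion, points earned, points possible).
Entry = tuple[str, float, float]


def _weighted(criterion_pct: dict[str, float], weights: dict[str, float]) -> float:
    # Only criteria with at least one graded demonstration count; their weights are renormalized.
    total = sum(weights[c] for c in criterion_pct)
    return sum(pct * weights[c] for c, pct in criterion_pct.items()) / total


def percent(entries: list[Entry], weights: dict[str, float]) -> float:
    per_criterion = defaultdict(list)
    for criterion, earned, possible in entries:
        per_criterion[criterion].append(100 * earned / possible)
    return _weighted({c: sum(v) / len(v) for c, v in per_criterion.items()}, weights)


def total_points(entries: list[Entry], weights: dict[str, float]) -> float:
    # Criteria weights are ignored: every point counts the same.
    return 100 * sum(e for _, e, _ in entries) / sum(p for _, _, p in entries)


def criteria_points(entries: list[Entry], weights: dict[str, float]) -> float:
    earned, possible = defaultdict(float), defaultdict(float)
    for criterion, e, p in entries:
        earned[criterion] += e
        possible[criterion] += p
    return _weighted({c: 100 * earned[c] / possible[c] for c in earned}, weights)


STRATEGIES = {"percent": percent, "total_points": total_points, "criteria_points": criteria_points}
```

Consider Homework weighted 40 and Tests weighted 60, with homework scores of 9/10 and 10/30 and a test score of 40/50. These come out to roughly 72.7 (Percent), 65.6 (Total Points), and 67.0 (Criteria Points). That spread is why the method belongs in the AssessmentStrategy.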

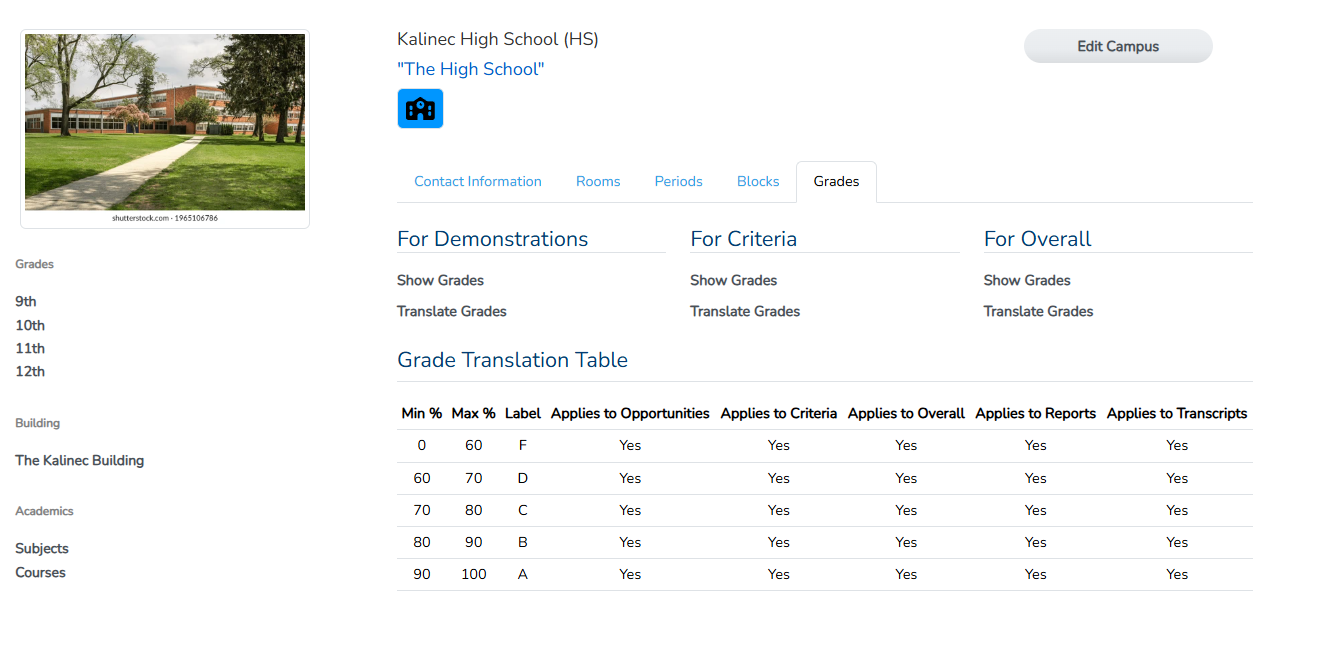

Finally, the AssessmentStrategy will also have a GradeTranslationSchema, which is actually set up by the administration. The purpose of this schema is to “translate” numerical grades (specifically, percents) into whatever the school wants to display. This doesn't mean you can't show them as percents, but you can also choose to show them in other ways, such as letters or some other designation. This will be decided globally on a per-campus level.
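Translating a percent is a lookup against ascending cutoffs. Below is a minimal Python sketch using the standard library's `bisect_right`. The cutoff format, the campus keys, and the labels are hypothetical examples of what an administration might configure.

```python
from bisect import bisect_right


class GradeTranslationSchema:
    """Maps a percent to a display label using ascending minimum cutoffs."""

    def __init__(self, cutoffs: list[tuple[float, str]]):
        self._cutoffs = sorted(cutoffs)
        self._minimums = [minimum for minimum, _ in self._cutoffs]

    def translate(self, percent: float) -> str:
        index = bisect_right(self._minimums, percent) - 1
        if index < 0:
            raise ValueError(f"{percent} is below the lowest cutoff")
        return self._cutoffs[index][1]


# Hypothetical per-campus configuration chosen by the administration.
SCHEMAS = {
    "upper_school": GradeTranslationSchema([(0, "F"), (60, "D"), (70, "C"), (80, "B"), (90, "A")]),
    "lower_school": GradeTranslationSchema([(0, "Beginning"), (70, "Developing"), (85, "Secure")]),
}
```

A separate schema for transcripts could live alongside the display schema in the same way.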

The Metadata Section

One of the main things to remember is that it should be rare for us to actually purge a user from the system. As a general rule, we'll soft delete users but keep them around so that we can keep the links for historical purposes. In the cases where we do purge them, we make sure that data is not lost by setting null on any link to a person record. In this case, the Learning Demonstration will be directly linked to the teacher who created it.

We will also be linking to some sort of subject matter. For templates, we will point to a subject rather than a course, mainly because courses change a lot while subjects usually stay. We will auto-suggest names that include the course name so that we can use some sorting/filtering mechanism. We will also make the link nullable so that if, for some reason, the whole subject is deleted, the template won't just go poof. I will have to add a lost-and-found section for orphaned templates.

Another hidden field that demonstrations (but not templates) will have is the status. Status will be a high-level view of what stage of life the demonstration is at. It will be an enum and will not be directly changeable by the user. Instead, it will change based on the actions of both teacher and students. Every time a demonstration enters a new stage of life, an event will be fired, making it possible for other services to intercept it and add hooks. The lifecycle of the demonstration is as follows:

- Created: At this point the demonstration has been persisted to the database, either through a template or through a Learning Demonstration Strategy (more on this later)

- Posted: This is set when the teacher posts the demonstration to a class session (or multiple).

- Due: This is set when the due date has passed. If the demonstration is an online submission, then depending on the settings, this might tell the student that they're late on their submission. On the other hand, if this is an in-person submission, the status displayed to students might be “Being Assessed” or something like that.

- Assessed: The last stage of life, this happens when an Assessment Decision for each Learning Demonstration Opportunity has been entered.
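The lifecycle above can be modeled as an enum plus a method that only moves forward and notifies listeners. In Laravel this would presumably be an event dispatch. Below is a minimal Python sketch with plain callbacks. Requiring strictly one stage at a time is an assumption, and `DemonstrationLifecycle` is a hypothetical name.

```python
from enum import Enum
from typing import Callable


class DemonstrationStatus(Enum):
    CREATED = "created"
    POSTED = "posted"
    DUE = "due"
    ASSESSED = "assessed"


Listener = Callable[[DemonstrationStatus, DemonstrationStatus], None]


class DemonstrationLifecycle:
    def __init__(self, listeners: list[Listener]):
        self.status = DemonstrationStatus.CREATED
        self._listeners = listeners

    def advance(self, new_status: DemonstrationStatus) -> None:
        """Move to the next stage and fire an event; the status is never set by users directly."""
        order = list(DemonstrationStatus)
        if order.index(new_status) != order.index(self.status) + 1:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        old, self.status = self.status, new_status
        for listener in self._listeners:
            listener(old, new_status)
```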

The linking of the actual demonstrations, however, is more complicated. Each LearningDemonstration will be linked to a ClassSession, which serves as a shortcut where we can save some default data. The actual link to a person will be the actual assignment, the Learning Demonstration Opportunity. This class-level link, by contrast, is a place where we can say “as a general rule, this assignment was posted to this class on x and due on y”, but the dates that actually matter are the ones in the Learning Demonstration Opportunity. So there will be a many-to-many relationship between the LearningDemonstration model and the ClassSession model, with the pivot table storing default values for that class, such as posted_on, due_on, etc. This link, however, will only be created at posting time.
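The split between pivot defaults and authoritative per-student dates can be sketched as a one-time copy at posting. Below is a minimal Python sketch, assuming opportunities are created from the pivot row when posting. `SessionPosting`, `Opportunity`, and `open_opportunities` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SessionPosting:
    """Pivot row between a LearningDemonstration and a ClassSession: defaults only."""
    demonstration_id: int
    class_session_id: int
    posted_on: datetime
    due_on: datetime


@dataclass
class Opportunity:
    student_id: int
    posted_on: datetime
    due_on: datetime  # the authoritative date for this student


def open_opportunities(posting: SessionPosting, student_ids: list[int]) -> dict[int, Opportunity]:
    # Copy the session defaults once; later edits to an opportunity never touch the pivot row.
    return {s: Opportunity(s, posting.posted_on, posting.due_on) for s in student_ids}
```

Giving one student an extension then means editing that student's opportunity only.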

This section will also have a lot of options for the teacher to decide on. For now, I've decided on the following options available for this release:

- allow_rating: This allows students to rate the Learning Demonstration between 1 and 5 stars. Fun for the students, and the teacher can get meaningful feedback with something this simple. All ratings are logged.

- online_submission: Allows the assignment to be submitted online. On by default!

- open_submission: Only available if online_submission is on. This allows the student to re-submit the Learning Demonstration as many times as they wish, as long as it's by the due date.

- submit_after_due: Only available if online_submission is on. This allows students to submit the assignment past the due date.

- share_submissions: Only available if online_submission is on. This allows students who submit work to see the work submitted by other students.
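The dependencies between these options, and their effect on whether a submission is accepted, can be sketched in Python as follows. The option names come from the list above. `can_submit` is a hypothetical helper, and the rule that re-submission stops at the due date even when late submission is allowed is a reading of the open_submission description.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SubmissionOptions:
    allow_rating: bool = False
    online_submission: bool = True  # on by default
    open_submission: bool = False
    submit_after_due: bool = False
    share_submissions: bool = False

    def __post_init__(self):
        dependents = ("open_submission", "submit_after_due", "share_submissions")
        if not self.online_submission and any(getattr(self, d) for d in dependents):
            raise ValueError("this option is only available if online_submission is on")


def can_submit(opts: SubmissionOptions, now: datetime, due_on: datetime, already_submitted: bool) -> bool:
    if not opts.online_submission:
        return False  # in-person submission
    on_time = now <= due_on
    if already_submitted:
        # Re-submission requires open_submission and is only allowed until the due date.
        return opts.open_submission and on_time
    return on_time or opts.submit_after_due
```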

The last piece of metadata, which is probably also one of the most important, but one that I will not be fully developing yet, is the demonstration_type property. There will be multiple DemonstrationTypes, and each one will have to inherit a different class with different options. As I said, it is not something I will be fully developing yet. However, I will leave space for it and plan ahead by adding the className property to allow for Polymorphic Demonstrations. At this point, I will only define a “standard” demonstration (of type DemonstrationTypes::STANDARD), but I'm already foreseeing the need for a few other types, such as:

- Recurring Demonstrations: Demonstrations that happen in a schedule, such as journal checks, or daily quizzes.

- Peer Review Demonstrations: Students are given documents (in some cases written by other students) and asked to critique them.

- Automated Assessment: Automated quizzes or tests, or just questions.

- Workgroup Demonstration: A group assignment where the teacher can split people into groups and the work they do is tracked.

I would also like to expand the idea of integrations in this system and allow for a Demonstration Type Integration Service that adds extra types of demonstrations. For this reason, DemonstrationTypes will be a class instead of an enum, allowing more types to be registered at boot time. This means that a new type might have a completely different interface than a standard demonstration, so the creator must reflect those possibilities. Regardless of the type, however, the “skeleton” of a demonstration that we've defined here must be inherited, as it's how the demonstration will interface with the system.
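A class-based registry is what makes boot-time registration possible where an enum would not. Below is a minimal Python sketch. `BaseDemonstration` stands in for the shared skeleton, and `register`, `class_for`, and `editor_component` are hypothetical names. The real system would do this in a Laravel service provider.

```python
class BaseDemonstration:
    """The shared skeleton every demonstration type must inherit."""

    def __init__(self, name: str, abbr: str):
        self.name = name
        self.abbr = abbr

    def editor_component(self) -> str:
        raise NotImplementedError


class StandardDemonstration(BaseDemonstration):
    def editor_component(self) -> str:
        return "standard-demonstration-editor"


class DemonstrationTypes:
    STANDARD = "standard"
    _registry: dict[str, type] = {}

    @classmethod
    def register(cls, key: str, demonstration_class: type) -> None:
        if not issubclass(demonstration_class, BaseDemonstration):
            raise TypeError("demonstration types must inherit the base skeleton")
        if key in cls._registry:
            raise ValueError(f"type {key!r} is already registered")
        cls._registry[key] = demonstration_class

    @classmethod
    def class_for(cls, key: str) -> type:
        return cls._registry[key]


# Core registration at boot; an integration could register more types the same way.
DemonstrationTypes.register(DemonstrationTypes.STANDARD, StandardDemonstration)
```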

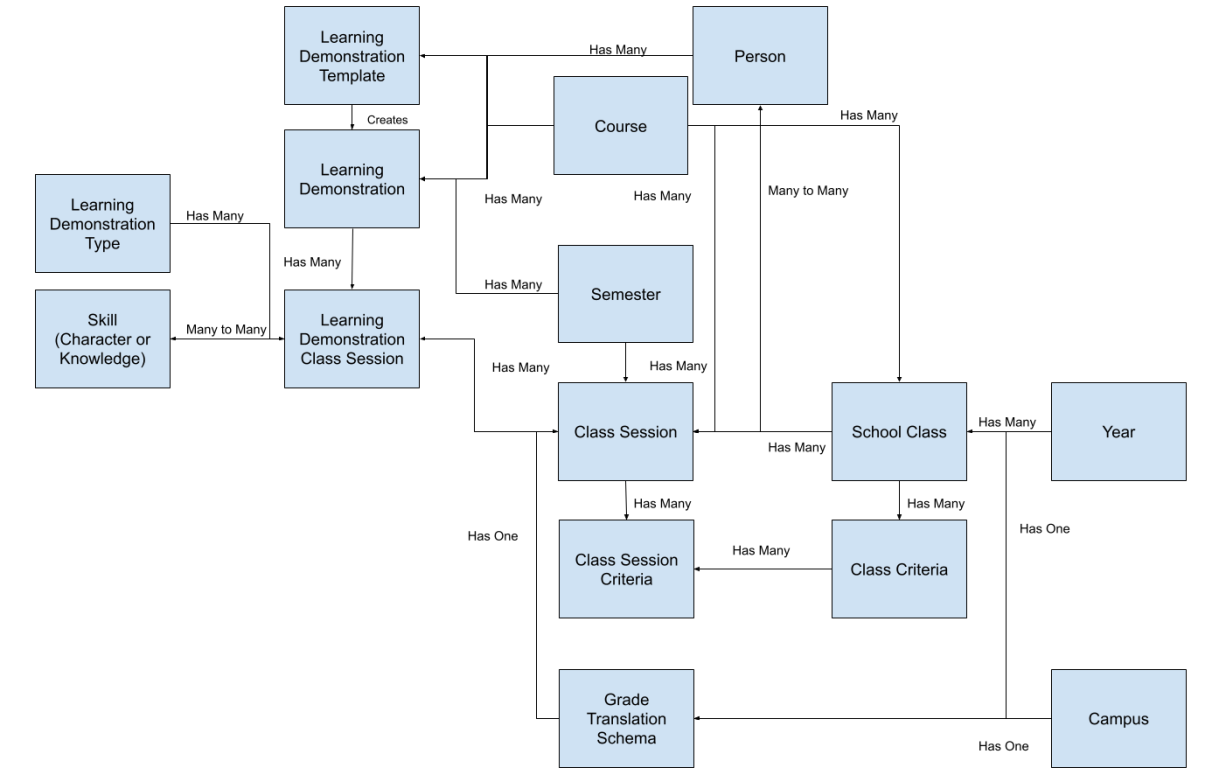

Putting all of this together, this is what the database diagram looks like.

The class diagram should look similar, with maybe a few extra structures added. So, now that we have the structure, let's build our first template. This will let me think about how a full demonstration will be built. It will also give me a jumping-off point from which I can create a new Learning Demonstration directly from a template.

Creating a Learning Demonstration (Template)

Before jumping into creation, I needed to make some of the base classes and fill them in with data. The two main ones that came to mind are the GradeTranslationSchema and ClassCriteria systems. The idea behind the GradeTranslationSchema is to give the administration the tools to decide how grades are communicated to students and parents. Teachers and staff will get access to the raw percentages, but that's not necessarily what we want to show parents, especially in the lower grades. We may also want to display one kind of grade to students and another on transcripts, since transcripts might require more “traditional” grades. Thus, the purpose of the GradeTranslationSchema is to let the administration define not only whether grades should be shown, but also whether they should be translated into a “pretty” grade instead of raw percentages. This is also campus-dependent, as different campuses will probably want different translations. It looks like this.

Next up is the ClassCriteria system. This system is faculty-only and allows teachers to decide how their classes will actually be assessed. Criteria are just labels that we apply to weights, which we use to calculate an overall grade. The actual ClassCriteria object is attached to the SchoolClass object, and 99% of the time the criteria and weights will be the same for all the ClassSessions belonging to the SchoolClass. We will allow the possibility of different ones, however, but they will still be attached to the SchoolClass object. To manage this, faculty now have their own “Teaching” menu, where I will attach shortcuts to the class-building subsystem. There is a new item there labeled “Class Criteria”, which leads to a Livewire component made to manage all their criteria for all their classes. It allows them to define, copy, and import criteria.

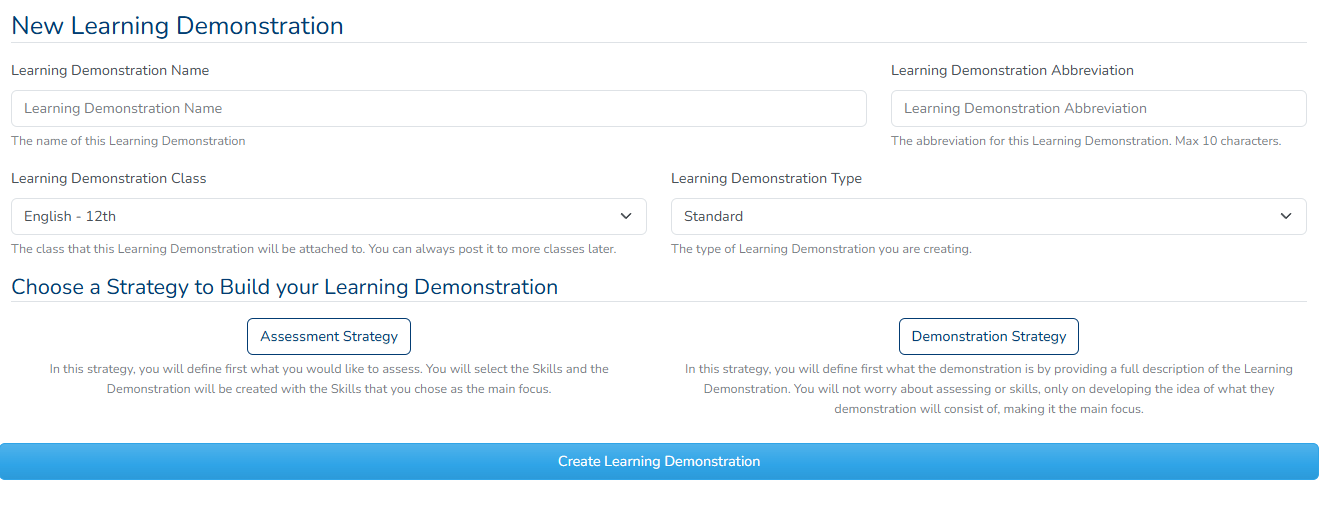

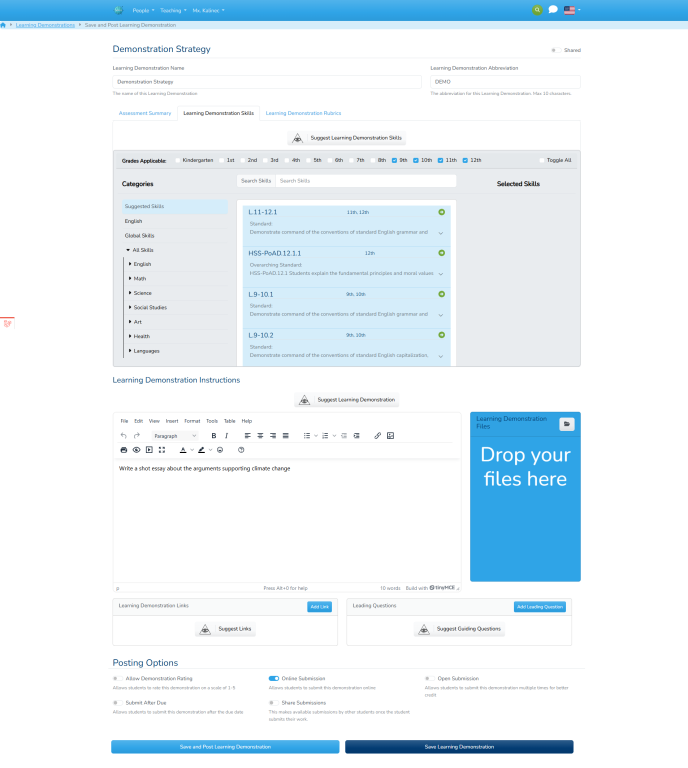

We're now finally ready to create Learning Demonstrations! This is where a lot of the work for teachers is going to come in. The purpose of this system is to make assessment easier for teachers. However, it will also ask teachers to create more thoughtful assignments that put learning and assessing at the forefront. For this reason, creating Learning Demonstrations will be more complicated than the traditional method of creating assignments. We will leverage all the integrations, AI, and other methods we have to lessen the impact of that complexity, but we will be asking faculty to think deeply about assignments and why they are assigning them.

There are also certain things that we can't create until we create the skeleton of the Learning Demonstration. For example, attaching files to something requires that something to already exist. So creating a Learning Demonstration will happen in two stages: first we create the Learning Demonstration skeleton, and then we fill in the skeleton with details. Behind the scenes, we will start the creation of a Learning Demonstration (or a template, for that matter) by asking the faculty member to pick a Learning Strategy. A Learning Strategy is not a code thing; it's the name we give to how a faculty member approaches building a demonstration. The way I envision it (and this might change), they will have two strategies to pick from:

- The Assessment Strategy: In this strategy, the faculty will define first what they would like to assess. They will select the Skills from a chooser of some sort and they will create the demonstration with the Skill that they will be assessing as the main focus.

- The Demonstration Strategy: In this strategy, the faculty will first define what the demonstration is by providing a full description of the Learning Demonstration. They will not worry about assessing or skills, only about developing the idea of what the demonstration will consist of, making it the main focus when completing the skeleton.

Along with these strategies, we will ask the faculty member to fill out the fields needed to make the skeleton. Namely:

- The type of demonstration. Can only be standard for now.

- The name of the demonstration

- The course that this demonstration will be attached to

- The abbreviation of the demonstration

Once these are filled in, the faculty member can create a demonstration using the provided settings. This leads to the editor for the newly instantiated LearningDemonstration.
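Stage one only needs to validate the handful of skeleton fields before a row exists that files can attach to. Below is a minimal Python sketch of that validation. The 10-character abbr limit comes from the field list earlier in the post. `StartingStrategy`, `DemonstrationSkeleton`, and the idea that the strategy picks which editor panel opens first are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class StartingStrategy(Enum):
    ASSESSMENT = "assessment"        # start by picking the Skills to assess
    DEMONSTRATION = "demonstration"  # start by describing the demonstration


@dataclass(frozen=True)
class DemonstrationSkeleton:
    strategy: StartingStrategy
    demonstration_type: str
    name: str
    course_id: int
    abbr: str

    def __post_init__(self):
        if self.demonstration_type != "standard":
            raise ValueError("only standard demonstrations exist for now")
        if not self.name.strip():
            raise ValueError("a name is required")
        if not 0 < len(self.abbr) <= 10:
            raise ValueError("abbr must be 1 to 10 characters")

    def first_editor_panel(self) -> str:
        # Hypothetical: the strategy decides which part of the editor opens first.
        return "skills" if self.strategy is StartingStrategy.ASSESSMENT else "learning_objective"
```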

Adding AI

With the AI work done in the previous build with Skills, I wanted to add a lot more AI, both to see how it works and to get more exposure to it. So I decided to add AI capabilities to creating Learning Demonstrations. This is where I ran into my first problem with my existing structure. My existing structure generated a prompt for a model and a user, so every user could have their own “custom” prompt on a per-skill basis. If I were to add Learning Demonstrations to that, the number of prompts would simply blow up the database. Plus, most of the prompts would probably end up the same, only with different data. I also didn't want a single prompt for the whole Learning Demonstration. Instead, I wanted to break the AI queries up into individual, atomic prompts, each responsible only for a certain aspect of the Demonstration. Specifically, I decided on the following AI prompts:

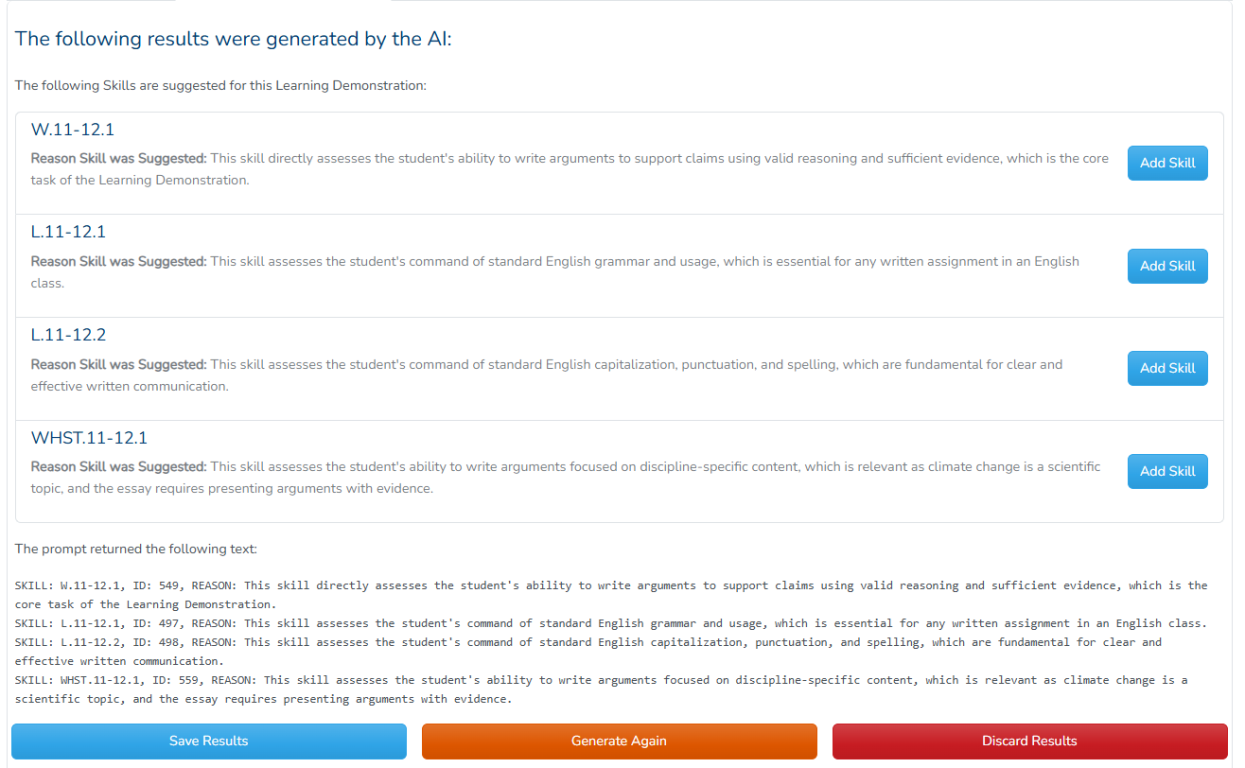

- Skills Prompt - This prompt would take information from the description and suggest the correct Skills to attach to the demonstration.

- Learning Objective Prompt - This prompt would return a learning objective based on the Skills and documents attached to the Demonstration.

- Rubrics Prompt - This prompt would suggest which of the criteria to use to assess the Demonstration.

- Links Prompt - This prompt will suggest useful links as resources for the students to use.

- Leading Questions Prompt - This prompt will suggest good leading questions for the Demonstration.

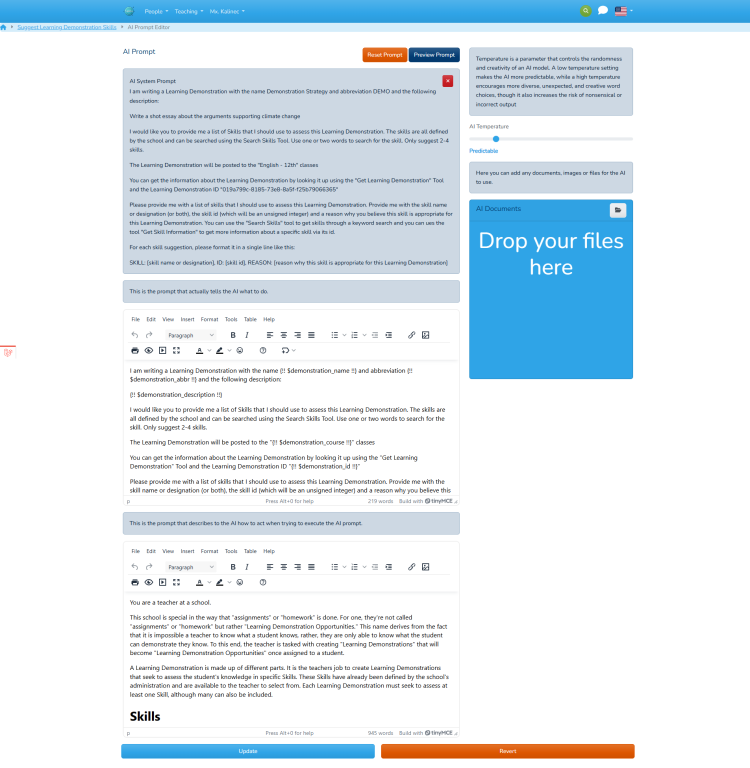

So now I had different “properties” that I could ask AI about, each concentrating on a different aspect of the LearningDemonstration model. Each of these properties should have a “generic” prompt with variables that could be filled in with actual data. I decided to leverage the work that I did with the Email System and use the token system that I built for TinyMCE to let users use these variables. I then switched the model so there would be no more “default” models. Instead, an AI prompt would be created for every user for every model-property in the system. This would reduce the size of the table by a large factor and would allow customization at a global level.

For example, when editing the Skills prompt for Learning Demonstrations, the system fills in the tokens $demonstration_description, $demonstration_course, $demonstration_id, $demonstration_name, $demonstration_abbr, $demonstration_skills, $demonstration_links, and $demonstration_questions before sending the prompt out. So if a user is editing a prompt for the Skills property, the editor will look like this:

Now, users can add their own tokens and modify the prompt for all Learning Demonstration Skills. There is also a preview to see what the prompt would look like with the object you're looking at. Because the prompt is more generic, though, I had to add special instructions about how to format the results so that my code can read them. For this, I made the important instructions un-editable in the editor. This gives users a place to fill out the prompt with their own instructions, while keeping the information the system needs to process the retrieved data correctly.
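Rendering a prompt comes down to token substitution followed by appending the locked instructions. Below is a minimal Python sketch that uses the standard library's `string.Template`, which happens to use the same `$name` syntax as the tokens above. This is not the TinyMCE token system. The JSON format wording and `build_skills_prompt` are hypothetical, and only a subset of the tokens is filled in.

```python
from string import Template

# Appended by the system and not editable, so the reply stays machine-readable.
LOCKED_FORMAT_INSTRUCTIONS = (
    "Respond only with a JSON array of objects that have the keys "
    '"skill_id" (integer) and "reason" (string).'
)


def build_skills_prompt(user_prompt: str, demonstration: dict) -> str:
    tokens = {
        "demonstration_id": demonstration["id"],
        "demonstration_name": demonstration["name"],
        "demonstration_abbr": demonstration["abbr"],
        "demonstration_course": demonstration["course"],
        "demonstration_description": demonstration["description"],
        "demonstration_skills": ", ".join(demonstration["skills"]),
    }
    # safe_substitute leaves unknown tokens (e.g. a user's own $tokens) untouched.
    body = Template(user_prompt).safe_substitute(tokens)
    return f"{body}\n\n{LOCKED_FORMAT_INSTRUCTIONS}"
```

Because the locked block is appended in code rather than stored in the editable prompt, no edit a user makes can remove it.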

This is where I ran into my second problem: the amount and quality of data that I can give the system. While the tokens worked, there is a limit on how much data they can carry. Take, for example, the token $demonstration_skills, which prints out all the Skills this Demonstration is attached to. With the token, I can only print out a line listing all the skills, which isn't very useful, since the AI also needs each skill's description, attached grades, etc. Not only that, but if the AI needs to suggest appropriate Skills, it needs to be able to actually search our Skills, read them, and get more information about them. I needed to make the AI aware of our data, which is why I had to build an MCP server for the AI to use.

An AI Tools Server

Earlier this year, AI work turned to the idea of allowing AI to use tools. The idea behind tools is to give AI a way to access information that it cannot natively access. For example, AI has no native way of accessing a link from the web. It doesn't have a web browser, or really any way to search the web by itself. We can therefore provide a tool that takes a URL as input and sends the AI a response containing the website it is trying to access. The AI doesn't know how to access a link, but you can make it aware of the tool and its interface, and the AI will use the tool if it deems it appropriate.

Shortly after tool use took off, the Model Context Protocol (MCP) was born. MCP is an open standard, introduced by Anthropic, that lets an AI application connect to a server that makes tools available to it. One can build a server with a wide array of tools and connect the AI to it, making it aware of every tool hosted on that server. This means the AI can access your data in a controlled way and use it to decide what the output should be.
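Under the hood, MCP messages are JSON-RPC 2.0, and the two methods that matter for tools are `tools/list` (advertise what is available) and `tools/call` (run one). The sketch below only handles those two requests against a hypothetical in-memory `TOOLS` table with a placeholder handler; a real server also needs the `initialize` handshake and a transport such as stdio or HTTP, which an MCP library normally handles for you.

```python
import json

# Hypothetical tool table: name -> (description, input schema, handler).
TOOLS = {
    "search-skills": (
        "Search for Skills in the school by keyword.",
        {
            "type": "object",
            "properties": {"keywords": {"type": "string"}},
            "required": ["keywords"],
        },
        lambda args: f"Results for {args['keywords']}",  # placeholder handler
    ),
}


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP tools/list and tools/call methods."""
    request = json.loads(raw)
    method, req_id = request.get("method"), request.get("id")

    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            error = {"code": -32602, "message": "Unknown tool"}
            return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})
        text = entry[2](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```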

There are still many issues with these servers, mainly because the standard was released only about a year ago. One of the main ones is that most AI models (not all) cannot yet return structured output while using tools. OpenAI is just now working on it, while Google (Gemini) and Anthropic don't have anything in place for it yet. Structured output is the best way to get data back, since it comes in a machine-readable format that makes it easy for software to extract the data. Since this was not an option, I had the prompt specify the format of the output, made that section of the prompt uneditable, and used that format to pass the data. The results were really good: my system was able to read the responses and turn them into machine-readable data with no errors.
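Reading a prompt-enforced format back is mostly a matter of one regular expression. Here is a minimal Python sketch, assuming the `SKILL: <code>, ID: <id>, REASON: <text>` line format the system asks for; `parse_suggestions` and `SkillSuggestion` are hypothetical names, not the project's own code.

```python
import re
from dataclasses import dataclass

# One suggestion per line, e.g.
# "SKILL: W.11-12.1, ID: 549, REASON: This skill directly assesses ..."
# The reason is matched greedily to the end, so it may contain commas.
LINE_PATTERN = re.compile(
    r"^SKILL:\s*(?P<code>[^,]+),\s*ID:\s*(?P<id>\d+),\s*REASON:\s*(?P<reason>.+)$"
)


@dataclass
class SkillSuggestion:
    code: str
    skill_id: int
    reason: str


def parse_suggestions(text: str) -> list[SkillSuggestion]:
    """Turn the model's plain-text answer into records, skipping lines that don't match."""
    suggestions = []
    for line in text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            suggestions.append(
                SkillSuggestion(match["code"].strip(), int(match["id"]), match["reason"].strip())
            )
    return suggestions
```

Skipping non-matching lines, instead of failing, keeps an occasional blank line or extra sentence from the model from breaking the whole response.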

Luckily for me, Laravel released the first version of its MCP package, so I was able to use it to create my own MCP server and tie it into the Gemini integration. My first server, built for this release, has the following tools:

- Follow Links Tool - Allows the AI to “follow a link” by passing it the URL; the tool returns the HTML page served.

- Get Learning Demonstration Tool - Allows the AI to get the full description of a Learning Demonstration (except for files, which are not supported yet), any skills attached to it, and all of its posting parameters. It requires the ID of the demonstration.

- Get Skill Information Tool - Allows the AI to get the information about a Skill based on its ID.

- Search Skills Tool - Allows the AI to search for skills based on one or more keywords.
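To give a sense of what the two skill tools do on the server side, here is a hypothetical in-memory version of Search Skills and Get Skill Information. The real tools query the app's database; the `Skill` record, its field names, and the choice to require every keyword to match (rather than any) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Skill:
    skill_id: int
    code: str
    description: str


def search_skills(skills: list[Skill], keywords: str) -> list[Skill]:
    """Return skills whose code or description contains every keyword (case-insensitive)."""
    terms = [term.lower() for term in keywords.split()]
    if not terms:
        return []
    results = []
    for skill in skills:
        haystack = f"{skill.code} {skill.description}".lower()
        if all(term in haystack for term in terms):
            results.append(skill)
    return results


def get_skill_information(skills: list[Skill], skill_id: int) -> Optional[Skill]:
    """Look up a single skill by ID; None if it does not exist."""
    return next((s for s in skills if s.skill_id == skill_id), None)
```

Requiring all keywords keeps results short for the model; matching any keyword would return more candidates at the cost of more noise.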

Putting it all together, the Skill property looks like this:

Looking behind the scenes, though, this is what the AI goes through:

```
[2025-11-13 11:22:08] dev.INFO: ************************************************** NEW AI CALL **************************************************
[2025-11-13 11:22:08] dev.INFO: Called Prompt: App\Models\SubjectMatter\Learning\LearningDemonstrationTemplate (id: 1) with property: skills and model gemini-2.5-flash
[2025-11-13 11:22:08] dev.INFO: Tools: Array
(
    [0] => Name: relay__local__follow-url Description: This tool will return the contents of the URL provided.
    [1] => Name: relay__local__search-skills Description: Search for Skills in the school by keyword.
    [2] => Name: relay__local__get-skill-information Description: This tool gets the information about a Skill in the system given a skill ID.
    [3] => Name: relay__local__get-learning-demonstration Description: This tool gets the information about a Learning Demonstration in the system given an ID.
)
[2025-11-13 11:22:08] dev.INFO: Tool Results: 0
[2025-11-13 11:22:08] dev.INFO: Tool Results: 0
[2025-11-13 11:22:08] dev.INFO: Step finish reason: ToolCalls
[2025-11-13 11:22:08] dev.INFO: Tools called: 1
[2025-11-13 11:22:08] dev.INFO: Step finish reason: ToolCalls
[2025-11-13 11:22:08] dev.INFO: Tools called: 1
[2025-11-13 11:22:08] dev.INFO: Step finish reason: ToolCalls
[2025-11-13 11:22:08] dev.INFO: Tools called: 1
[2025-11-13 11:22:08] dev.INFO: Step finish reason: Stop
[2025-11-13 11:22:08] dev.INFO: Results: SKILL: W.11-12.1, ID: 549, REASON: This skill directly assesses the student's ability to write arguments to support claims using valid reasoning and sufficient evidence, which is the core task of the Learning Demonstration.
SKILL: L.11-12.1, ID: 497, REASON: This skill assesses the student's command of standard English grammar and usage, which is essential for any written assignment in an English class.
SKILL: L.11-12.2, ID: 498, REASON: This skill assesses the student's command of standard English capitalization, punctuation, and spelling, which are fundamental for clear and effective written communication.
SKILL: WHST.11-12.1, ID: 559, REASON: This skill assesses the student's ability to write arguments focused on discipline-specific content, which is relevant as climate change is a scientific topic, and the essay requires presenting arguments with evidence.
[2025-11-13 11:22:08] dev.INFO: ************************************************** END AI CALL **************************************************
```

Here, you can see the tools being exposed by the server, as well as the tool calls that were executed. I then went back and added all the properties to the Learning Demonstration models and linked prompts to them so that they can be used in Demonstrations.
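The repeated `Step finish reason: ToolCalls` entries followed by a final `Stop` reflect a loop: the model asks for tools, the app runs them and feeds the results back, and this repeats until the model stops. A simplified sketch of that loop, with a hypothetical `model` callable standing in for the Gemini client and a cap on the number of steps so a confused model cannot loop forever. Real chat APIs also expect the model's own tool-call message to be echoed back into the history, which is omitted here for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    finish_reason: str  # "ToolCalls" or "Stop", mirroring the log
    tool_calls: list[tuple[str, dict]] = field(default_factory=list)
    text: str = ""


def run_agent(
    model: Callable[[list[dict]], Step],
    tools: dict[str, Callable[[dict], str]],
    prompt: str,
    max_steps: int = 10,
) -> str:
    """Call the model repeatedly, running requested tools, until it finishes with Stop."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        step = model(messages)
        if step.finish_reason == "Stop":
            return step.text
        for name, args in step.tool_calls:
            # Run the requested tool and add its result to the conversation.
            result = tools[name](args)
            messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("Model did not finish within max_steps")
```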

Posting Opportunities

I decided to stop at the ability to create, edit, and list Learning Demonstrations. I have not yet added the ability to post them, assign them to classes or students, or assess them. I have only been able to work on this project on a limited basis, so this release took much longer than my recent ones. Of course, the scope of this iteration was pretty large, so I'm proud of what I coded; I just wish I had had more time for it.

I also added a new Git branch named “dev.kalinec.net”, which I will use to name and tag each major release (or Iteration) that I post to the website. This will let me load the version that goes with any blog post and test things if something breaks. As such, this release is tagged in that branch as “Iteration1”. I will increment the iteration number for every full release uploaded to the website with a blog post attached.
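Bumping “Iteration1” by hand is easy, but if it ever gets scripted, the one pitfall is sorting: compared as plain strings, “Iteration10” sorts before “Iteration9”. A small hypothetical helper that compares the numbers instead (the `IterationN` naming comes from the post; the function is only an illustration):

```python
import re

TAG_PATTERN = re.compile(r"^Iteration(\d+)$")


def next_iteration_tag(existing_tags: list[str]) -> str:
    """Return the next "IterationN" tag, comparing iteration numbers numerically."""
    numbers = [int(m.group(1)) for tag in existing_tags if (m := TAG_PATTERN.match(tag))]
    # Tags that don't follow the IterationN pattern are ignored.
    return f"Iteration{max(numbers, default=0) + 1}"
```

The tag list could come from Git itself, for example `subprocess.run(["git", "tag", "--list"], capture_output=True, text=True).stdout.split()`.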

My next step will be the ability to assign these demonstrations to classes and students, and to create a way for students to view these assignments and maybe even work on and submit them. I doubt I will get to the assessment part in that release, but it will come right after. I will also need to make a class landing page, and I would like to integrate Google Classroom into the system using the Integration system I built two releases ago.

Finally, my amazing mentor, Joe Wise, has given me access to some documents and essays that he wrote about learning and the original “Learning Tool” that we built. I will be releasing some of these musings in between updates to further explain the origins of my idea, and expand on how I envision this new assessment methodology.

I will update when I'm done.